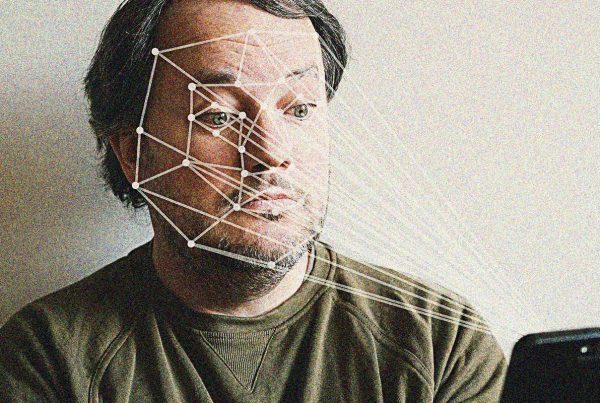

Facial recognition uses the physical characteristics of our faces to create a mathematical model that is unique to each of us, just like a fingerprint. At CCLA, we think it’s better to call it “facial fingerprinting” rather than recognition, because that term gives a more accurate impression of what we’re talking about: an identifier inextricably linked with our bodies. In a sense, it is an extreme form of carding, because it turns all of us into walking ID cards.

Facial recognition runs the risk of annihilating privacy in public. Imagine if someone were able to identify your name, address, place of work, friend group, or many other private details simply by taking a picture of you in public and running it through a database. While this may sound like the talk of a conspiracy theorist, private companies such as Clearview AI have already made this possible by collecting billions of photographs from the internet and social media platforms, then giving access to anyone who pays for a subscription. These companies and their subscribers can then track our consumer behaviour, politicians can use our data to influence decision making, and strangers can find out where we live and work.