In the wake of the live streaming of the massacres in Christchurch, New Zealand, Canada has joined many other nations in answering the “Christchurch call” and vowing to eliminate violent extremist and terrorist content online. But what does the proposed “Digital Charter” mean for people in Canada and our civil liberties? At the moment, the Charter appears to be entirely aspirational: we have a list of principles the government has announced but have no sense of whether, how or when those principles will be embedded in law, policy or practice.

Of the 10 Charter principles, at least one – if implemented into an enforceable law – will have a direct and significant impact on the content that Canadians can create, disseminate and access online. In other words, a very real impact on our freedom of expression which, it’s worth remembering, is protected in our Canadian Charter of Rights and Freedoms. That is a real – not aspirational – Charter with the full force of the Constitution, Canada’s supreme law. The government has said, “Canadians can expect that digital platforms will not foster or disseminate hate, violent extremism or criminal content.” On its own, a principle which sets out an “expectation” for what privately owned platforms will do has little weight, but one of the other principles promises “clear and meaningful penalties” for violations of law and regulations to support the principles.

This principle has me worried. How do we deal with hate and extremism without capturing the merely unpopular or offensive? Don’t get me wrong: I don’t spend time on neo-Nazi sites or seek out graphic acts of violence on video streaming platforms. I don’t like this kind of content and actively avoid it. But I worry about broad rules which “outlaw” some types of content and what this means for a democracy where free expression is supposed to be a fundamental freedom. Regulating expression is notoriously tricky. The sheer volume of content online and the Internet’s fundamentally global character only add to the challenges.

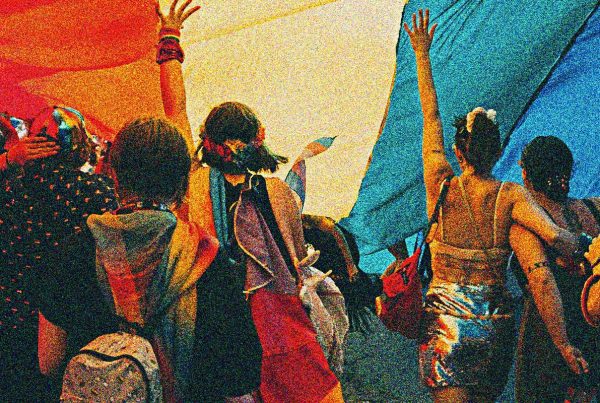

I need to know more about the kind of “violent extremism” from which Canadians can expect to be shielded. It is appalling that the massacre in Christchurch was live streamed using a social media platform, but is there a way to address that problem without also censoring other content which might have significant social value? Think of repressed minorities who suffer violence at the hands of the state. Live streaming those acts of violence might bring the world’s attention to an important issue. Consider also the impact of live streaming videos which have captured horrific acts of police brutality. Video can be an important means of holding the powerful to account. Does the government get to decide who can stream content live? Does Facebook? Should we let an algorithm determine who can be trusted to stream?

What does the government mean when it talks about “fostering or disseminating hate”? The legal definition of “hate speech” is quite narrow, and for good reason. But when most people use the term, it’s not that narrow definition they have in mind, or expect to be enforced. Our Criminal Code prohibition on hate speech (s. 319) has been held to be constitutional by the Supreme Court because it is supposed to only capture the most extreme kind of content. Even so, the legal definition is open to varying interpretations, and courts and judges frequently disagree about whether a given piece of content crosses the line. When does harsh criticism of Israel become anti-Semitism? When do strong statements of religious beliefs about the “proper” definition of marriage become hate propaganda targeting the LGBTQ community? Is the Digital Charter going to place these decisions in the hands of private platforms? If so, will those platforms be punished if, in the eyes of the government, they make the wrong call? If the answer is yes, they will certainly err on the side of censorship rather than free expression. And if dissemination is relatively clear, what does it mean to “foster” hate? Will platforms be expected to interfere in how online networks form to ensure like-minded bigots can’t find each other? If the goal of social media is to help connect people, are we now saying that some people really do need to be isolated? Our freedom to associate is protected by the same Constitution which safeguards freedom of expression.

Finally, is the principle’s reference to “criminal content” a separate category, or are hate and violent extremism sub-categories of this broader theme? Are platforms responsible for deciding if content is criminal or will they only be expected to remove something which has already been the subject of a criminal conviction? State censorship is dangerous because we never know when our views, opinions or content may be deemed too offensive or harmful (or simply on the wrong end of the political spectrum) for public dissemination. Outsourcing censorship to a corporate entity accountable only to its shareholders is at least as dangerous.

With an election coming up in a few short months, the aspirational Digital Charter may make for talking points with little substance. Nevertheless, it is good to put this issue on the agenda. It is worth having a serious think about how to reconcile a strong commitment to free expression with a commitment or desire to deal with extremism online. And, when we pick our next elected representative, we should at least understand how they feel about free expression, and what they intend to do to protect and promote this right in the digital public square.

About the Canadian Civil Liberties Association

The CCLA is an independent, non-profit organization with supporters from across the country. Founded in 1964, the CCLA is a national human rights organization committed to defending the rights, dignity, safety, and freedoms of all people in Canada.
